Kind architecture and how it works

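Kind uses one container to simulate one node. Inside that container it runs systemd, which supervises kubelet and containerd. The kubelet inside the container then starts the other Kubernetes components, such as kube-apiserver, etcd, and the CNI plugin.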

Driven by a configuration file, it can create several containers to simulate several nodes and assemble those nodes into a multi-node Kubernetes cluster.

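Internally, Kind deploys the cluster with kubeadm. Using the Alpha features that kubeadm provides, it can deploy a highly available cluster with multiple control-plane (HA master) nodes. In an HA setup it also starts an extra load-balancer container (HAProxy in current Kind releases) that provides a single load-balanced endpoint in front of the API servers.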

Installing Docker

Install Docker on the host

root@k8s:~# for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do apt-get remove $pkg; done

root@k8s:~# apt-get update

root@k8s:~# apt-get install ca-certificates curl gnupg

root@k8s:~# install -m 0755 -d /etc/apt/keyrings

root@k8s:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | gpg --dearmor -o /etc/apt/keyrings/docker.gpg

root@k8s:~# chmod a+r /etc/apt/keyrings/docker.gpg

# Add the repository to Apt sources:

root@k8s:~# echo "deb [arch="$(dpkg --print-architecture)" signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu "$(. /etc/os-release && echo "$VERSION_CODENAME")" stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null

root@k8s:~# apt-get update

Install the Docker packages

root@k8s:~# apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Installing Kind

First, download the Kind binary.

root@k8s:~# wget https://github.com/kubernetes-sigs/kind/releases/download/v0.20.0/kind-linux-amd64

root@k8s:~# mv kind-linux-amd64 /usr/bin/kind

root@k8s:~# chmod +x /usr/bin/kind

Specify the version and create a cluster

root@k8s:~# cat >kind-demo.yaml<<EOF
kind: Cluster
name: k8s-kind-demo
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  disableDefaultCNI: false
nodes:
- role: control-plane
#- role: control-plane
#- role: control-plane
- role: worker
- role: worker
- role: worker
EOF

Parameter details:

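- name: sets the cluster name.
- networking.disableDefaultCNI: a new cluster ships with kindnetd, a lightweight CNI plugin, by default. Set this to true to disable it and install another CNI, such as Calico.
- nodes entries with role: control-plane: the number of control-plane (master) nodes.
- nodes entries with role: worker: the number of worker nodes.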
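The commented-out control-plane entries in kind-demo.yaml hint at the highly available layout described in the architecture section. As a minimal sketch, assuming a hypothetical file name kind-ha.yaml and cluster name k8s-kind-ha (neither is part of the original walkthrough), a config with three control-plane nodes could be written like this:

```shell
# Sketch only: the file name kind-ha.yaml and the cluster name k8s-kind-ha
# are illustrative assumptions, not part of the original walkthrough.
cat > kind-ha.yaml <<'EOF'
kind: Cluster
name: k8s-kind-ha
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: control-plane
- role: control-plane
- role: worker
EOF

# Count the control-plane entries to confirm the file describes an HA layout.
grep -c 'role: control-plane' kind-ha.yaml
```

Passing this file to kind create cluster --config=kind-ha.yaml should start one container per listed node plus the extra load-balancer container that Kind adds when more than one control-plane node is requested.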

Create the cluster

root@k8s:~# kind create cluster --config=kind-demo.yaml --image=kindest/node:v1.24.15 --name=k8s-kind-demo

The log output looks like this:

Creating cluster "k8s-kind-demo" ...

✓ Ensuring node image (kindest/node:v1.24.15) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-k8s-kind-demo"

You can now use your cluster with:

kubectl cluster-info --context kind-k8s-kind-demo

Thanks for using kind! 😊

Installing kubectl

root@k8s:~# curl -LO https://dl.k8s.io/release/v1.24.15/bin/linux/amd64/kubectl

root@k8s:~# chmod +x kubectl

root@k8s:~# mv kubectl /usr/bin/

Check the cluster status

root@k8s:~# kubectl cluster-info --context kind-k8s-kind-demo

Kubernetes control plane is running at https://127.0.0.1:42687

CoreDNS is running at https://127.0.0.1:42687/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'

Next, kubectl get node shows the node information

root@k8s:~# kubectl get node

NAME                          STATUS   ROLES           AGE    VERSION
k8s-kind-demo-control-plane   Ready    control-plane   2m2s   v1.24.15
k8s-kind-demo-worker          Ready    <none>          90s    v1.24.15
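Each node listed above is really a Docker container on the host, which matches the architecture described at the start. A small sketch for checking this, assuming the cluster name k8s-kind-demo from this walkthrough and the io.x-k8s.kind.cluster label that Kind puts on its node containers (the helper name kind_label_filter is made up for illustration):

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the Docker label filter for one kind cluster.
kind_label_filter() {
  printf 'label=io.x-k8s.kind.cluster=%s\n' "$1"
}

cluster="k8s-kind-demo"

if command -v docker >/dev/null 2>&1; then
  # One container per node: list the node containers and their images.
  docker ps --filter "$(kind_label_filter "$cluster")" \
    --format '{{.Names}}\t{{.Image}}' || true

  # Inside a node container, systemd supervises kubelet and containerd.
  docker exec "${cluster}-control-plane" \
    systemctl is-active kubelet containerd || true
else
  echo "docker not found; skipping node inspection" >&2
fi
```

If the cluster is running, the second command is expected to report both units as active, since systemd inside the node container manages them.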

root@k8s:~# kubectl get pod -n kube-system

NAME                                                  READY   STATUS    RESTARTS   AGE
coredns-57575c5f89-9ltk6                              1/1     Running   0          108s
coredns-57575c5f89-glgvh                              1/1     Running   0          107s
etcd-k8s-kind-demo-control-plane                      1/1     Running   0          2m7s
kindnet-ljptz                                         1/1     Running   0          99s
kindnet-qzglw                                         1/1     Running   0          109s
kube-apiserver-k8s-kind-demo-control-plane            1/1     Running   0          2m5s
kube-controller-manager-k8s-kind-demo-control-plane   1/1     Running   0          2m1s
kube-proxy-kvzx9                                      1/1     Running   0          109s
kube-proxy-pnzmz                                      1/1     Running   0          99s
kube-scheduler-k8s-kind-demo-control-plane            1/1     Running   0          2m9s

Common Kind commands

List clusters

root@k8s:~# kind get clusters

Delete a cluster

root@k8s:~# kind delete cluster --name <cluster-name>

Creating multiple clusters with Kind

In practice, you only need to change the YAML file.

root@k8s:~# cat >kind-demo1.yaml<<EOF
kind: Cluster
name: k8s-kind-demo1
apiVersion: kind.x-k8s.io/v1alpha4
networking:
  disableDefaultCNI: true # disable the default CNI (kindnetd)
nodes:
- role: control-plane
#- role: control-plane
#- role: control-plane
- role: worker
EOF

Create the cluster with the following command

root@k8s:~# kind create cluster --config=kind-demo1.yaml --image=kindest/node:v1.28.0 --name=k8s-kind-demo1

The log output looks like this:

Creating cluster "k8s-kind-demo1" ...

✓ Ensuring node image (kindest/node:v1.28.0) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-k8s-kind-demo1"

You can now use your cluster with:

kubectl cluster-info --context kind-k8s-kind-demo1

Thanks for using kind! 😊

Check the cluster status

root@k8s:~# kubectl get node

NAME                           STATUS     ROLES           AGE     VERSION
k8s-kind-demo1-control-plane   NotReady   control-plane   3m46s   v1.28.0
k8s-kind-demo1-worker          NotReady   <none>          3m17s   v1.28.0
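Both nodes report NotReady here because kind-demo1.yaml disables the default CNI, so no pod network exists yet. As a rough sketch (the function name not_ready_nodes is hypothetical), the affected nodes can be picked out of kubectl get node output like this:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Demo on the two rows shown above, so no running cluster is needed.
printf '%s\n' \
  'k8s-kind-demo1-control-plane NotReady control-plane 3m46s v1.28.0' \
  'k8s-kind-demo1-worker NotReady <none> 3m17s v1.28.0' | not_ready_nodes
```

Against a live cluster the same filter can be fed with kubectl get node --no-headers | not_ready_nodes. After a CNI such as Calico is installed, kubectl wait --for=condition=Ready node --all --timeout=120s is one way to block until the nodes recover.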

Pod status

root@k8s:~# kubectl get pod -n kube-system

NAME                                                   READY   STATUS    RESTARTS   AGE
coredns-5dd5756b68-l94jm                               0/1     Pending   0          3m58s
coredns-5dd5756b68-psm7p                               0/1     Pending   0          3m58s
etcd-k8s-kind-demo1-control-plane                      1/1     Running   0          4m12s
kube-apiserver-k8s-kind-demo1-control-plane            1/1     Running   0          4m11s
kube-controller-manager-k8s-kind-demo1-control-plane   1/1     Running   0          4m12s
kube-proxy-bln4n                                       1/1     Running   0          3m50s
kube-proxy-l4k49                                       1/1     Running   0          3m59s
kube-scheduler-k8s-kind-demo1-control-plane            1/1     Running   0          4m16s

- The 1.24 cluster uses the default CNI plugin (kindnet), so its nodes are Ready.
- The 1.28 cluster was created with disableDefaultCNI: true, so no CNI plugin is installed. Its nodes stay NotReady and the CoreDNS pods stay Pending until a CNI is installed.

Next, you can switch between clusters quickly with kubectl config use-context <context-name>.
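Kind registers each cluster in kubeconfig under a context named kind-<cluster-name>, which is why the cluster k8s-kind-demo is addressed as kind-k8s-kind-demo. A minimal sketch that derives the context name and queries every cluster in turn (the helper kind_context is an illustrative name, not a kind command):

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a kind cluster name to its kubectl context name.
kind_context() {
  printf 'kind-%s\n' "$1"
}

if command -v kind >/dev/null 2>&1 && command -v kubectl >/dev/null 2>&1; then
  # Show the nodes of every kind cluster without changing the current context.
  for cluster in $(kind get clusters); do
    echo "== ${cluster} =="
    kubectl --context "$(kind_context "$cluster")" get node || true
  done
fi
```

Passing --context per command leaves the active context untouched, which can be more predictable in scripts than switching back and forth with use-context.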

First, list the cluster names. I am currently on the Kubernetes 1.28 cluster and will switch to the 1.24.15 one.

root@k8s:~# kind get clusters

k8s-kind-demo

k8s-kind-demo1

Switch to the k8s-kind-demo cluster

root@k8s:~# kubectl config use-context kind-k8s-kind-demo

Switched to context "kind-k8s-kind-demo".

root@k8s:~# kubectl get node

NAME                          STATUS   ROLES           AGE   VERSION
k8s-kind-demo-control-plane   Ready    control-plane   43m   v1.24.15
k8s-kind-demo-worker          Ready    <none>          43m   v1.24.15

Switch to the k8s-kind-demo1 cluster

root@k8s:~# kubectl config use-context kind-k8s-kind-demo1

Switched to context "kind-k8s-kind-demo1".

root@k8s:~# kubectl get node

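NAME                           STATUS     ROLES           AGE   VERSION
k8s-kind-demo1-control-plane   NotReady   control-plane   36m   v1.28.0
k8s-kind-demo1-worker          NotReady   <none>          36m   v1.28.0

Testing the cluster

The following test runs against k8s-kind-demo, so switch back to it first with kubectl config use-context kind-k8s-kind-demo. On k8s-kind-demo1 the pods would stay Pending because no CNI is installed.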

root@k8s:~# cat<<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:alpine
        name: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30001
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: centos:v1
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

Check the service status

root@k8s:~# kubectl get pod,svc

NAME                         READY   STATUS    RESTARTS   AGE
pod/busybox                  1/1     Running   0          2m27s
pod/nginx-6fb79bc456-st6qk   1/1     Running   0          2m27s

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP        48m
service/nginx        NodePort    10.96.93.229   <none>        80:30001/TCP   2m28s

Test DNS

root@k8s:~# kubectl exec -ti busybox -- nslookup kubernetes

Server: 10.96.0.10

Address: 10.96.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.96.0.1

Test the nginx NodePort

root@k8s:~# kubectl get node

NAME                          STATUS   ROLES           AGE   VERSION
k8s-kind-demo-control-plane   Ready    control-plane   53m   v1.24.15
k8s-kind-demo-worker          Ready    <none>          52m   v1.24.15

root@k8s:~# docker exec -it k8s-kind-demo-control-plane bash

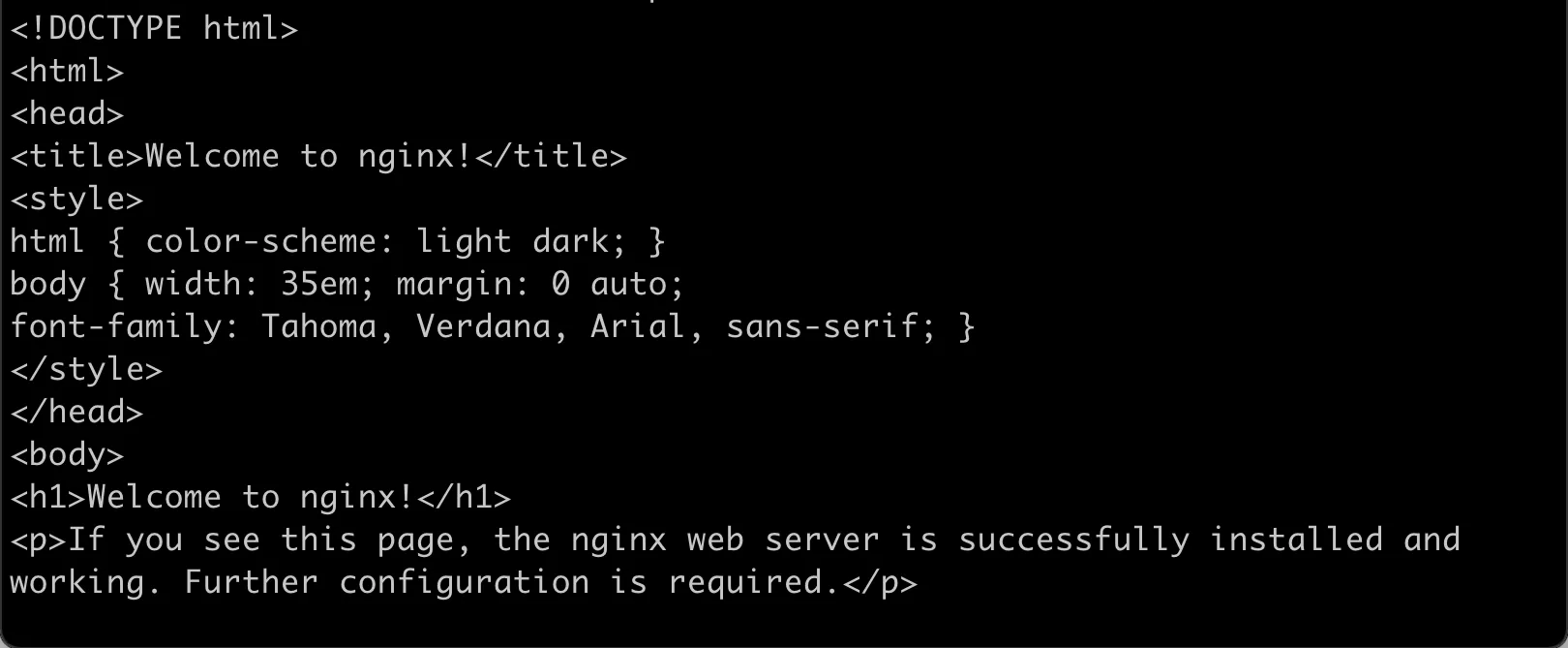

Access nginx from inside the node container, where the Service's ClusterIP is reachable

root@k8s-kind-demo-control-plane:/# curl 10.96.93.229
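The curl above targets the Service's ClusterIP. To exercise the NodePort (30001) itself, one option on a Linux host is to call a node container's IP address directly. A sketch under that assumption (the helper nodeport_url is hypothetical; the node name and port come from this walkthrough):

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the URL for a NodePort service on a given node IP.
nodeport_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}

node="k8s-kind-demo-worker"
port=30001

if command -v docker >/dev/null 2>&1; then
  # Look up the node container's IP address on the Docker network kind uses.
  ip="$(docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$node" 2>/dev/null || true)"
  if [ -n "$ip" ]; then
    # With the default traffic policy, any node IP serves the NodePort.
    curl -s --max-time 5 "$(nodeport_url "$ip" "$port")" || true
  fi
fi
```

On hosts where container IPs are not routable from the host (Docker Desktop, for example), this approach is unlikely to work, and mapping the port through extraPortMappings in the Kind config is the usual alternative.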
